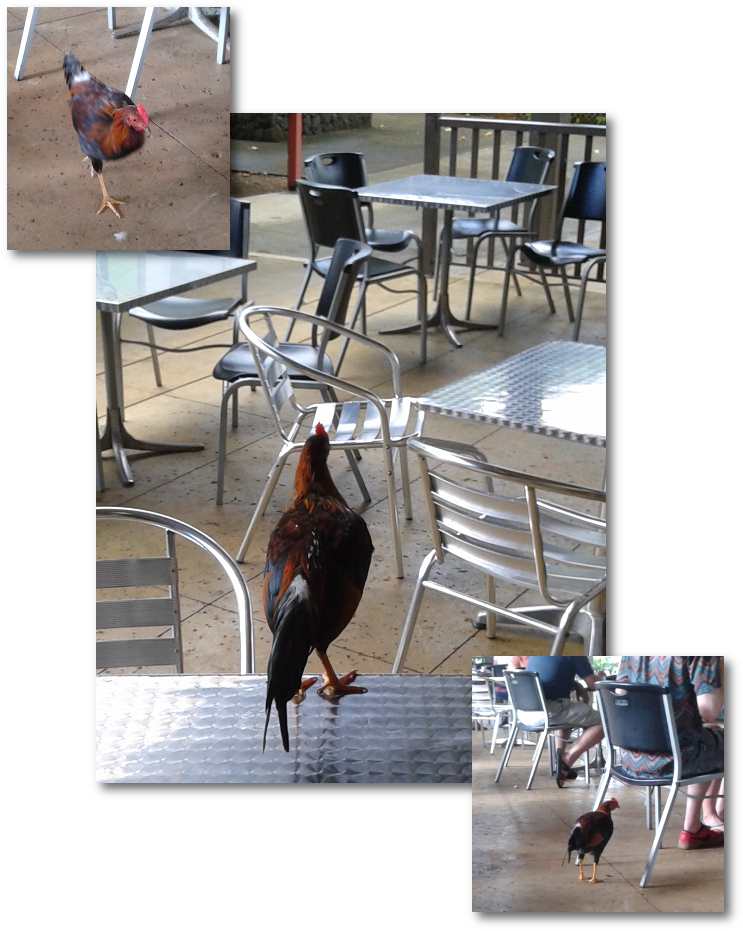

Feral chickens on the Hawaiian Island of Kaua’i are ubiquitous. And they can be aggressive, particularly when they are roaming around common outdoor eating areas.

While the vast majority of visitors to the island honor the signs that say “Don’t Feed the Chickens,” all it takes is a couple of noob’s to put the operant conditioning in motion and keep it going with each new planeload of first-time and unaware visitors.

I watched the consequences of this play out during a recent trip to the island. I was enjoying a cup of coffee and a blueberry scone at The Spot in Princeville. (Side Bar: GO HERE! The food and coffee is FANTASTIC!) A young couple, obviously new to the island, collected their breakfast order at the service window, selected a table, and then went back to the service window to get utensils, napkins, and whatever else. Left unattended for less than 5 seconds, the chickens were on the table and a sizeable rooster had made off with a croissant.

I can only describe the woman’s response as upset and indignant. She promptly returned to the service window and asked – expectantly – for a replacement. To which The Spot employee directed her attention to the sign above the service windows that said, “DO NOT LEAVE FOOD UNATTENDED. Chickens are aggressive and will attack your food if not guarded. WE ARE NOT RESPONSIBLE FOR THE CHICKENS AND CANNOT REPLACE DAMAGED FOOD FOR YOU.”

There are two (at least) lessons to be learned from this. One by the patron and one by the owner of The Spot.

First, the patron. Five bucks (or whatever a croissant costs on Kaui’i) is a very cheap price to pay for a valuable lesson about not just the chickens on Kaua’i, but the very fact that the Rules of Life from wherever your point of origin was have changed. I’ve camped on this island many time over the years and if you are not mindful of the dangers and keep the fact you are not in Kansas any more foremost in your mind, it’s easy to get into trouble. It’s a stunningly beautiful part of the planet with many hidden dangers. Slip and fall on a muddy trail, for example, can be deadly. Lack of awareness of the rip tides and undertows at the beaches can be equally deadly.

So stay alert. Be aware. Read every sign you see. Study what the locals do. Ask questions. And do a little research before traveling to Hawai’i.

For the owner of the Spot, I have this suggestion: The chicken warning sign is written in black marker on brown paper. It’s also placed high in the service window where patrons collect their order. If there is a line out the door, it typically bends away from the service window. Patrons don’t come to the service windows until their name is called. When they do, their natural line of sight is down, looking at the super scrumptious food. It is certain their attention will be drawn away from the warning sign about the chickens (#1 in the picture below) as they focus on gathering their food (#2 in the picture below.)

Fine to keep the sign in the service window, but lower it so there’s a better chance the patrons will see it. Also, put it on white paper. Even better, put a duplicate sign on the inside of the shop viewable from where customers are paying for their food. While they are standing in line waiting to place their order and reading the menu on the wall for the 12th time they are more likely to read the chicken warning sign. Maybe include a cartoon image of a rooster running off with a croissant.

This won’t “fix” the problems the unaware types bring with them onto the island, but I suspect it will cut down on the number of self-entitled noob’s demanding compensation for their lack of awareness. I’d like for The Spot to do everything they can to succeed, which means controlling costs and satisfying customers. I’d also like for the noob’s to gain some self-awareness and take that back home with them.

Win-win.

(I dropped a note to the owners of The Spot with these suggestions. They replied that they liked them and plan to implement these simple changes. If you’re in the area, send me a note, maybe with a picture, as to whether or not the sign has changed. If so, ask if the changes made any difference! Always good to know the results of any experiment.)